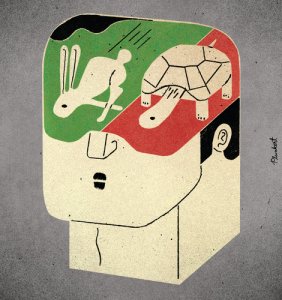

Intelligent gossip at the water cooler. That’s the ultimate goal for Daniel Kahneman, author of the widely praised Thinking, Fast and Slow and recipient of the Nobel Prize in Economics: to give us a richer vocabulary for spotting errors of judgement and choice. People are not fully rational beings; their behaviour is driven by two inner “agents”, System 1 and System 2. System 1 acts automatically and quickly, with little or no effort and no sense of voluntary control. Unfortunately, it isn’t particularly strong in logic and statistics. System 2 corresponds to who we think we are. It is responsible for our effortful mental activities and can override the intuitive conclusions of System 1. People are prone to cognitive biases and faulty decision-making because System 1 kicks in before the more rational and logical System 2 is engaged.

“Although System 2 believes itself to be where the action is, the automatic System 1 is the hero of this book.”

Most of the time, we rely on the mental shortcuts of System 1. It is inexhaustible, unlike System 2, whose capacity is limited. “Paying attention” can be taken quite literally: attention is a limited budget, and effortful tasks spend it. Ego depletion (the phenomenon that exerting self-control leaves us less able to exert it again soon after) results not only from mental effort but also from resisting emotional temptations. Kahneman recalls the Oreo experiment, in which four-year-old children’s ability to resist the immediate temptation of one Oreo biscuit in favour of two Oreos after 10 minutes turned out to strongly predict later success in life.

In the book, Kahneman vividly describes a wide range of systematic errors in judgement and choice: far from being rational, our decisions are affected by a whole variety of biases and fallacies. An important characteristic of System 1 is that it bases decisions on information that we see or that is immediately available: “What you see is all there is” (WYSIATI). This explains availability bias, the tendency to overweight personal experiences, dramatic events (plane crashes!) and anything that attracts media coverage. WYSIATI also explains priming and halo effects. The halo effect is our tendency to let a first impression, such as a speaker who is handsome and confident, colour our judgement of everything else about that person. The priming effect is the influence of recent exposure to information on our judgement. It explains how volunteers walk more slowly down a corridor after seeing words related to old age, or do better in general-knowledge tests after writing down the attributes of a typical professor. Anchoring, a form of priming, explains why it’s a bad idea to let the salesman set the starting price when bargaining.

Overconfidence is another manifestation of WYSIATI. When we estimate a quantity, we rely on information that comes to mind, neglect what we don’t know and construct a coherent story in which our estimate makes sense. 90% of car drivers think they are better than average. We also focus on what we want and can do, neglecting the plans and skills of others. Add to this the fact that uncertainty is not socially acceptable for a “professional”. Another way our lazy System 1 operates is through substitution: it replaces a difficult target question with an easier heuristic question. “How happy are you in life?” becomes “What is my mood right now?”

System 1 is pretty bad at statistics, especially at calculating probabilities. The Law of Small Numbers refers to our tendency to ignore that small samples are much more likely than large ones to produce extreme outcomes. It also explains our bias to pay more attention to the content of a message than to its reliability (sample sizes are rarely mentioned). Another example is base rate neglect, which is also at the root of the planning fallacy. When given a character description that resembles a librarian, people are likely to think that the person is a librarian rather than a teacher, without accounting for the fact that there are many more teachers than librarians. Base rate neglect means that we overweight the diagnostic value of an intuitive impression instead of sticking close to the base rate. Yet another example is confusing the probable with the plausible. Our associative machinery likes to construct plausible stories from past events, rather than admitting that it was all just a coincidence. Adding detail to a description makes it more plausible, but less probable. After reading a description of a woman called Linda, subjects rated it more likely that Linda was a bank teller who is active in the feminist movement than that Linda was a bank teller, even though a conjunction of two conditions can never be more probable than either condition on its own.
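
To make these statistical points concrete, here is a minimal Python sketch of three of them: how often small versus large samples look extreme, how a base rate changes the answer via Bayes’ rule, and why a conjunction can never beat one of its parts. All numbers are hypothetical: the 1-in-20 ratio of librarians to teachers, the 90%/10% fit of the description and the Linda probabilities are invented for illustration, not taken from the book.

```python
from math import comb


def extreme_share(n: int, pct: int = 80) -> float:
    """Probability that n fair coin flips come out at least pct% heads
    or at least pct% tails, i.e. an 'extreme' sample."""
    assert 50 < pct <= 100, "the two tails must not overlap"
    k = -(-n * pct // 100)  # ceil(n * pct / 100) with integer arithmetic
    one_tail = sum(comb(n, i) for i in range(k, n + 1))
    return 2 * one_tail / 2 ** n  # a fair coin is symmetric, so double one tail


def posterior(prior_odds: float, likelihood_ratio: float) -> float:
    """Bayes' rule in odds form: posterior odds = prior odds x likelihood ratio."""
    odds = prior_odds * likelihood_ratio
    return odds / (1 + odds)


def conjunction(p_a: float, p_b_given_a: float) -> float:
    """P(A and B) = P(A) * P(B | A), which can never exceed P(A)."""
    return p_a * p_b_given_a


# Law of small numbers: extreme results are far more common in small samples.
print(extreme_share(10))   # 0.109375
print(extreme_share(100))  # vanishingly small

# Base rates (hypothetical): 1 librarian per 20 teachers; the description fits
# 90% of librarians but only 10% of teachers, a likelihood ratio of 9.
print(posterior(1 / 20, 9))  # about 0.31: still more likely a teacher

# Linda (hypothetical probabilities): adding "feminist" can only lower the odds.
p_teller = 0.05
print(conjunction(p_teller, 0.3) <= p_teller)  # True
```

Even a description nine times more typical of librarians leaves the teacher as the better bet once the base rate is taken into account.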

I am particularly fond of this example [the Linda problem] because I know that the [conjoint] statement is least probable, yet a little homunculus in my head continues to jump up and down, shouting at me—“but she can’t just be a bank teller; read the description.”

Stephen Jay Gould

Our unwillingness to deduce the particular from the general is matched only by our willingness to infer the general from the particular. Whether it’s about helping people in need or picking stocks, people confronted with statistics are very reluctant to accept that the numbers apply to them, claiming they are different. Conversely, coherence bias makes people very quick to draw general conclusions from events that happen in their neighbourhood. WYSIATI!

People who claim to rely on their intuition are using their System 1. People who are (or are made to feel) powerful, who are knowledgeable novices rather than true experts, who are in a good mood or who have had a few successes are more likely to rely on their intuition. Intuition only makes sense in a sufficiently predictable environment, where skills can be learned through prolonged practice and plenty of feedback. Chess is an example. Stock picking is not: it is a low-validity environment. When Edge asked him for his favourite formula, Kahneman chose:

Success = talent + luck

Great success = a little more talent + a lot of luck

We tend to overestimate the role of talent and underestimate the role of luck. Regression to the mean explains why highly intelligent women tend to marry men who are less intelligent than they are, and why winners of the ‘Golden Ball’ are likely to perform worse the following year. A great achievement or misfortune owes a great deal to luck and is therefore unlikely to be repeated the following year. Nassim Taleb speaks about the narrative fallacy, our tendency to create ‘stories’ that ‘make sense’ of the past.
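
The ‘Success = talent + luck’ formula is enough to produce regression to the mean. The Python sketch below is a hypothetical simulation, not data from the book: each player has a fixed talent, each season adds fresh, independent luck, and both are assumed to be standard normal. The players who top season one score noticeably lower on average in season two, even though nobody’s talent has changed.

```python
import random
import statistics


def regression_demo(n_players: int = 10_000, top_share: float = 0.01, seed: int = 1):
    """Return (season-1 mean, season-2 mean) for the top season-1 performers.

    Assumed model: score = talent + luck, with talent fixed per player and
    luck redrawn every season; both are standard normal (an assumption)."""
    rng = random.Random(seed)
    talent = [rng.gauss(0, 1) for _ in range(n_players)]
    season1 = [t + rng.gauss(0, 1) for t in talent]
    season2 = [t + rng.gauss(0, 1) for t in talent]

    # Pick the best performers of season 1 (by default the top 1%).
    n_top = max(1, int(n_players * top_share))
    top = sorted(range(n_players), key=lambda i: season1[i], reverse=True)[:n_top]

    return (statistics.mean([season1[i] for i in top]),
            statistics.mean([season2[i] for i in top]))


first, second = regression_demo()
print(f"top players, season 1: {first:.2f}")
print(f"same players, season 2: {second:.2f}")  # lower, but still above the average of 0
```

In this model, the larger luck’s share of the variation in scores, the stronger the drop after a stellar season.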

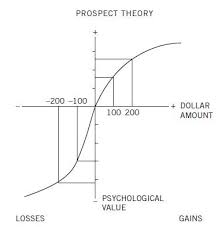

Prospect theory (for which Kahneman was awarded the Nobel) shows how our choices are driven by the subjective value of gains and losses relative to a reference point, rather than by expected values. We respond more strongly to losses than to equivalent gains (for most people, about twice as strongly). The endowment effect makes a coffee mug we got for free more valuable to us once we own it. Prospect theory also shows how we overweight (or completely neglect) unlikely events (the possibility effect), and how a small probability of not succeeding disproportionately reduces the value of an otherwise certain gain (the certainty effect). When outcomes are likely, we are risk averse for gains and risk seeking for losses; when they are unlikely, the pattern flips, which is why we keep buying lottery tickets and why insurance companies make profits. In stock trading, we tend to sell winners and keep losers. Often, it’s better to cut your losses rather than wait and hope to break even. The best way to deal with our fallacies in low-validity environments is to rely on formulas and standardized factual questions, and to avoid inventing ‘broken legs’ (exceptions to your rules).
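
Loss aversion and the over- and underweighting of probabilities can each be written down as a small function. This Python sketch uses the parameter estimates Tversky and Kahneman reported in 1992 (a curvature of 0.88, a loss-aversion coefficient of 2.25 and a probability-weighting parameter of 0.61 for gains); applying the same weighting to losses, and scoring a gamble as a simple weighted sum, are simplifications made for this sketch rather than the full cumulative theory.

```python
ALPHA = 0.88   # diminishing sensitivity to larger gains and losses
LAMBDA = 2.25  # loss aversion: losses loom roughly twice as large as gains
GAMMA = 0.61   # curvature of the probability-weighting function (gains estimate)


def value(x: float) -> float:
    """Subjective value of a change x relative to the reference point."""
    if x >= 0:
        return x ** ALPHA
    return -LAMBDA * (-x) ** ALPHA


def weight(p: float) -> float:
    """Decision weight: overweights small probabilities, underweights near-certain ones."""
    return p ** GAMMA / (p ** GAMMA + (1 - p) ** GAMMA) ** (1 / GAMMA)


def prospect_score(outcomes) -> float:
    """Simplified score of a gamble given as (outcome, probability) pairs.
    Simplification: the gains weighting is applied to losses too."""
    return sum(weight(p) * value(x) for x, p in outcomes)


coin_flip = [(100, 0.5), (-100, 0.5)]   # win or lose 100 on a fair coin
print(prospect_score(coin_flip) < 0)    # True: expected value is 0, yet it feels bad
print(weight(0.01), weight(0.99))       # 1% feels bigger, 99% feels smaller
```

A fair bet with an expected value of zero gets a negative score, which is loss aversion in a single line of arithmetic.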

If that’s not enough, our memory, on which we base our decisions, suffers from quirks such as duration neglect and the peak-end rule. These were strikingly illustrated in one of Kahneman’s more harrowing experiments. Two groups of patients were to undergo painful colonoscopies. The patients in Group A got the normal procedure. So did the patients in Group B, except that a few extra minutes of mild discomfort were added after the end of the examination. Which group suffered more? Well, Group B endured all the pain that Group A did, and then some. But because the prolonged procedure ended less painfully, the patients in Group B remembered it as less unpleasant.
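
Kahneman found that retrospective ratings were predicted well by the average of the pain at the worst moment and at the end, while the duration of the procedure hardly mattered. Here is a minimal Python sketch of that peak-end rule; the per-minute pain ratings on a 0 to 10 scale are invented for illustration and are not the study’s data.

```python
def peak_end(ratings):
    """Predicted retrospective rating under the peak-end rule:
    the mean of the worst moment and the last moment. Duration is ignored."""
    return (max(ratings) + ratings[-1]) / 2


group_a = [2, 5, 8, 7, 8]        # hypothetical per-minute pain ratings, 0 to 10
group_b = group_a + [4, 2]       # the same procedure plus a few milder minutes

for name, ratings in (("A", group_a), ("B", group_b)):
    print(name, "total pain:", sum(ratings), "remembered:", peak_end(ratings))
# A total pain: 30 remembered: 8.0
# B total pain: 36 remembered: 5.0
```

Group B accumulates more total pain yet gets the milder remembered score, because only the peak and the ending enter the formula.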

As with colonoscopies, so with life. It is the remembering self that calls the shots, not the experiencing self. Our memory of a vacation is shaped by the peak-end rule, not by how much fun (or how miserable) it actually was moment by moment.

“Odd as it may seem, I am my remembering self, and the experiencing self, who does my living, is like a stranger to me.”

Are we really so hopeless? We can’t turn off System 1, and Kahneman acknowledges that he’s just as prone to its pitfalls as anyone else. However, being aware of biases and fallacies helps us recognise situations where it’s a good idea to sit down and engage System 2. There are some useful techniques as well. Before making an important decision, it’s a good idea to organise a pre-mortem meeting: a thought experiment in which participants imagine that it is a year into the future, that the plan has been implemented as it now stands, and that the outcome was a disaster. Each participant then writes a brief history of that disaster. Pre-mortems help to legitimise doubt and encourage even supporters of the decision to search for possible threats.

The findings of behavioural economics are making their way into policy and daily life. Both the UK and the US have a government unit dedicated to applying its principles to policy making. Behavioural economics also helps explain why personal stories trump numbers in global development, and why education should focus on teaching learners to be disciplined thinkers with knowledge of decision-making skills and of the principles of probability, choice theory and statistics.

Everyone should read Thinking, Fast and Slow. It’s an astonishingly rich book: lucid, profound, full of intellectual surprises and self-help value.