A recommended education blog is that of Donald Clark. In various blog posts, he debunks some popular educational theories, such as learning styles, Kirkpatrick’s four levels of evaluation and the left-brain/right-brain distinction, and takes on hot-air-selling educational gurus such as Ken Robinson and Sugata Mitra. James Atherton formulates it perfectly:

‘So often in education, shallow unsubstantiated TED talks replace the real work of researchers and those who take a more rigorous view of evidence. Sir Ken Robinson, is, I suspect, the prime example of this romantic theorising, Sugata Mitra the second. Darlings of the conference circuit, they make millions from talks but do untold damage when it comes to the real world and the education of our children.’

As in management, popular but unsubstantiated theories seem to be a predicament of education, where research struggles to find its way to the classroom and where consultants make a nice buck selling these theories to a captive audience of teachers in professional development.

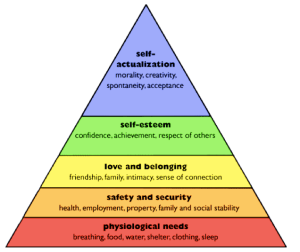

First, Maslow himself updated his model in 1970, but this updated model hardly found its way into the professional development circuit. Second, the model doesn’t stand up to basic scientific scrutiny:

Although hugely influential, his work was never tested experimentally at the time and when it was, from the 70s onwards, was found wanting. Empirical evidence showed no real evidence in terms of a strict hierarchy, nor the categories, as defined by Maslow.

The self-actualisation theory is now regarded as having no real value as it is wholly subjective. The problem is his slapdash use of evidence. Self-actualised people are selected by him then used as evidence for self-actualisation.

An even weaker aspect of the theory is its strict hierarchy. It is clear that the higher needs can be fulfilled before the lower needs are satisfied. There are many counter-examples and indeed, creativity can atrophy and die on the back of success. Maslow himself felt that the lines were not that clear. In short, subsequent research has shown that his hierarchy is crude, as needs are pursued non-hierarchically, often in parallel. A different set of people could be chosen to prove that self-actualisation was the result of, say, trauma or poverty (Van Gogh etc.).

Most sets of indicators for the well-being of children are more complex, sophisticated and do not fall into a simple hierarchy. There are many such schemas at international (UNICEF) and national levels. They rarely bear much resemblance to Maslow’s hierarchy.

Indeed, research on economic development in developing countries shows that people frequently prefer investing in things like cellphones, local traditions such as marriage and funeral ceremonies, and education before their basic needs are met.

Extensive research on need fulfillment and well-being (Tay and Diener, 2011) offers only limited support for Maslow’s hypothesis:

Our analyses reveal that as hypothesized by Maslow, people tend to achieve basic and safety needs before other needs. However, fulfilling the various needs has relatively independent effects on SWB (subjective well-being). For example, a person can gain wellbeing by meeting psychosocial needs regardless of whether his or her basic needs are fully met.

Another implication of our findings is that need fulfillment needs to be achieved at the societal level, not simply at the individual level. Although Maslow focused on individuals, we found that there are societal effects as well. It helps one’s SWB if others in one’s nation have their needs fulfilled.

More rigour in teacher professional development is certainly needed. Frustratingly, in our first workshop in South Africa, university (!) lecturers came in with their materials on left-brain/right-brain thinking and learning styles. On the positive side, the presence of such materials helps to tell the lazy or incompetent providers from the quality ones.

![disruptive-innovation[1]](https://stefeducation.files.wordpress.com/2013/02/disruptive-innovation1.gif?w=300&h=300)